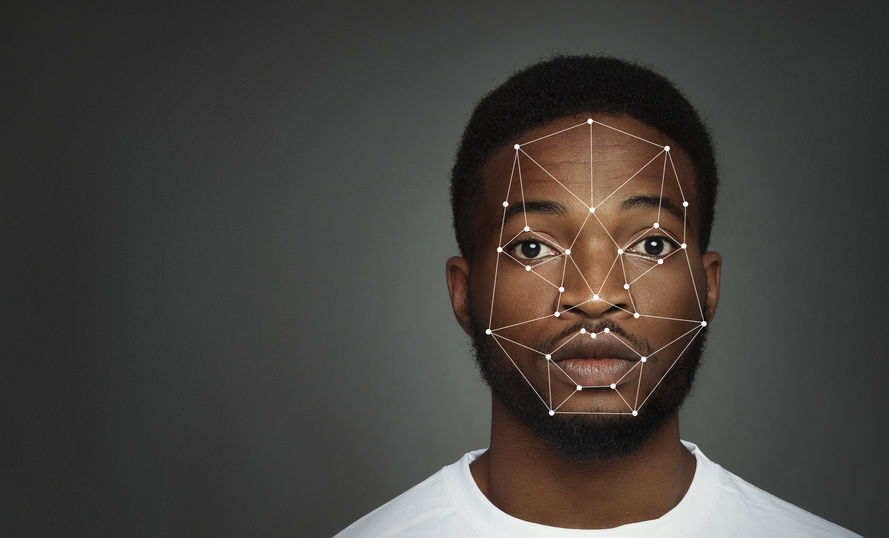

We’ve certainly covered facial recognition in the 4Cast before, but a newer subset of that tech is causing some major waves. Technology can now not only match a name to a face but also (in theory) determine how the person with that face is feeling. Known formally as “affect recognition” but more commonly called “emotion recognition,” it may be even more controversial than its broader cousin.

- Emotion Recognition is as Creepy as Hell [Gizmodo] “Emotion recognition technology, at best, promises to read commuters’ mental anguish and adjust subway cabin conditions accordingly, and at worst, puts biased and buggy mental microscopes in the hands of corporate overlords. In a new report, the NYU research center AI Now calls for regulators to ban the tech.”

- Emotion-detecting tech should be restricted by law – AI Now [BBC] “‘It’s being used everywhere, from how do you hire the perfect employee through to assessing patient pain, through to tracking which students seem to be paying attention in class. At the same time as these technologies are being rolled out, large numbers of studies are showing that there is… no substantial evidence that people have this consistent relationship between the emotion that you are feeling and the way that your face looks.’”

- The Risks of Using AI to Interpret Human Emotions [Harvard Business Review] “Because of the subjective nature of emotions, emotional AI is especially prone to bias. For example, one study found that emotional analysis technology assigns more negative emotions to people of certain ethnicities than to others. Consider the ramifications in the workplace, where an algorithm consistently identifying an individual as exhibiting negative emotions might affect career progression.”

- AI “Emotion Recognition” Can’t Be Trusted [The Verge] “‘Companies can say whatever they want, but the data are clear,’ Lisa Feldman Barrett, a professor of psychology at Northeastern University and one of the review’s five authors, tells The Verge. ‘They can detect a scowl, but that’s not the same thing as detecting anger.’”

From the Ohio Web Library:

- Affective Computing Market Worth $90.0 Billion by 2024 – Exclusive Report by MarketsandMarkets™ (PR Newswire. (2019, November 4). Affective Computing Market Worth $90.0 Billion by 2024 – Exclusive Report by MarketsandMarkets™. PR Newswire US.)

- Global Healthcare IT Market 2018-2022 | Emergence of AI-enabled Emotion Recognition Technologies to Boost Growth (Technavio Research. (2018, Spring). Global Healthcare IT Market 2018-2022 | Emergence of AI-enabled Emotion Recognition Technologies to Boost Growth | Technavio. Business Wire (English).)

- Decoded: A Scientist’s Quest To Reclaim Our Humanity by Bringing Emotional Intelligence to Technology (Hoffert, B. (2019). Decoded: A Scientist’s Quest To Reclaim Our Humanity by Bringing Emotional Intelligence to Technology. Library Journal, 144(10), 64.)